Spark Ecosystem

0:00

In this video, we discuss the Spark ecosystem. The Spark ecosystem has six main components

0:07

We'll discuss each of them in turn with the help of a diagram

0:11

So, let us look at the Spark ecosystem. This is the Apache Spark ecosystem

0:17

It has six main components: Spark SQL, Spark Streaming, MLlib, which is the machine learning library,

0:27

GraphX, which handles graph-related computations, and SparkR. And there is one more, the Apache Spark

0:36

Core API. The Apache Spark Core API supports R, SQL, Python, Scala, and Java. So while

0:45

developing Spark applications, we can select any one of these languages according

0:51

to our choice. So there are six components in the Spark ecosystem, and we shall discuss

0:57

each one of them in turn. So, what is the Spark ecosystem? In this diagram, we can see the

1:04

six components of Spark ecosystem, and these components are Spark Core, and then Spark SQL

1:11

we're having the Spark Stream, we're having the respective ML lib, that is the machine learning

1:17

library, we're having the Graph X for the graph-related computations, and also the Spark

1:22

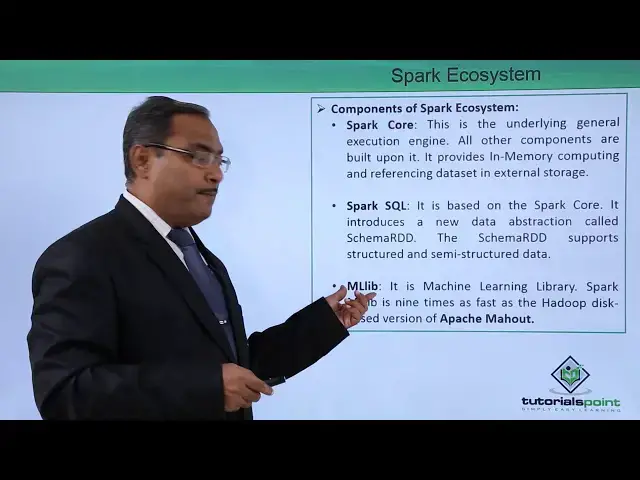

R So let us go one by one at a time So components of Spark ecosystem the first component is the Spark core So this is the underlying general execution engine and all other components are built upon it

1:38

So, this is the general execution engine, and the other five components depend on Spark Core

1:45

It provides in-memory computing and can reference data sets in external storage

1:51

So, it can reference data held in external storage, but it performs the

1:57

computation in memory, and that is why it is lightning fast. Next, we come to Spark SQL

2:05

It is based on Spark Core, and it introduces a new data

2:12

abstraction called SchemaRDD. We know that RDD stands for resilient distributed dataset

2:20

It is a data structure with the help of which a data set, or all the data sets

2:26

residing in the RDD, can be distributed across multiple nodes and servers
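
As a rough illustration of that idea, the plain-Python sketch below splits one data set into partitions, as if each partition lived on a different node, applies the same function to every partition, and then gathers the results. It does not use Spark. The helper names `partition` and `map_partitions` are hypothetical and chosen only for this example.

```python
def partition(records, num_partitions):
    """Split records into num_partitions chunks, round-robin."""
    parts = [[] for _ in range(num_partitions)]
    for i, rec in enumerate(records):
        parts[i % num_partitions].append(rec)
    return parts


def map_partitions(parts, fn):
    """Apply fn to every record, one partition at a time (as each node would)."""
    return [[fn(rec) for rec in part] for part in parts]


data = list(range(1, 11))                       # one logical data set
parts = partition(data, 3)                      # pretend these live on 3 nodes
squared = map_partitions(parts, lambda x: x * x)
total = sum(sum(part) for part in squared)      # gather the partial results
```

The caller works with one logical data set, while the work is done partition by partition.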

2:31

And that is the main feature of the RDD. So, Spark SQL is based on Spark Core, and it introduces a new data

2:40

abstraction known as SchemaRDD. SchemaRDD supports structured and semi-structured data

2:47

Structured data can be thought of as database tables, that is, tables in a relational database management system. Semi-structured data might be in the form of, say, CSV, XML, or JSON
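
To make the idea of rows plus a schema more concrete, here is a small plain-Python sketch, not Spark code. It reads records from CSV and from JSON, applies one schema to both, and then filters them the way a SQL query would. The schema, the sample records, and the helper `apply_schema` are all made up for illustration. In later Spark releases the SchemaRDD abstraction is called DataFrame.

```python
import csv
import io
import json

# Hypothetical schema: column name -> Python type.
SCHEMA = {"name": str, "age": int}


def apply_schema(raw_row, schema=SCHEMA):
    """Convert a raw record into a typed row that follows the schema."""
    return {col: typ(raw_row[col]) for col, typ in schema.items()}


csv_text = "name,age\nAsha,31\nRavi,27\n"
json_text = '[{"name": "Mina", "age": "45"}]'

csv_rows = [apply_schema(r) for r in csv.DictReader(io.StringIO(csv_text))]
json_rows = [apply_schema(r) for r in json.loads(json_text)]
rows = csv_rows + json_rows

# Roughly: SELECT name FROM rows WHERE age > 30
over_30 = [r["name"] for r in rows if r["age"] > 30]
```

Once both sources share a schema, the same query works on all rows regardless of where they came from.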

3:03

Next, we come to MLlib, the machine learning library

3:07

It is the machine learning library, and Spark MLlib is nine times as fast as the Hadoop disk-based

3:16

version of Apache Mahout. So, compared to the Hadoop disk-based version of Apache Mahout, MLlib is nine times faster for machine learning operations and for developing machine learning applications

3:33

Next, we come to Spark Streaming. Spark Streaming leverages Spark Core's fast scheduling capability to perform streaming analytics

3:44

So, Spark Streaming is used for streaming analytics. It ingests data in

3:51

mini-batches and performs RDD transformations on those mini-batches of data. So, it takes the data

3:59

in mini-batches and then performs RDD transformations on those mini-batches of data
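
The mini-batch idea can be sketched in plain Python, without Spark. The stream is cut into small batches, and the same transformation runs on each batch. One simplifying assumption: batches here are cut by record count so that the example is deterministic, whereas Spark Streaming cuts them by a time interval. The function `mini_batches` and the sample events are hypothetical.

```python
def mini_batches(stream, batch_size):
    """Yield successive lists of up to batch_size items from an iterable."""
    batch = []
    for item in stream:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:                      # emit the final, possibly smaller, batch
        yield batch


events = ["error", "ok", "ok", "error", "ok", "error", "error"]

# The same transformation (count the errors) is applied to every mini-batch.
error_counts = [
    sum(1 for e in batch if e == "error")
    for batch in mini_batches(events, 3)
]
```

Each entry in `error_counts` is the result for one mini-batch, so results arrive batch by batch rather than after the whole stream has been read.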

4:08

Next, we come to GraphX, which handles graph-related computations. This is

4:14

a distributed graph processing framework, and it provides an API for expressing graph computations that can model user-defined graphs by using the Pregel abstraction API. This is a very important one, the Pregel abstraction API

4:32

So, it provides an API for expressing graph computations. Whenever graph-related computations are required, we always refer

4:40

to this particular component of the Spark ecosystem, GraphX, which can model

4:45

user-defined graphs by using the Pregel abstraction API. It also provides an optimized runtime for this abstraction
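
To give a feel for the Pregel style of computation, here is a minimal plain-Python sketch. It is not the GraphX API. The work proceeds in rounds called supersteps: in each round, active vertices send messages to their neighbours, and every vertex that receives messages updates its own value. In this example each vertex ends up labelled with the smallest vertex id it is connected to, which identifies connected components. The function name `pregel_min_label` and the sample graph are made up for illustration.

```python
def pregel_min_label(edges, vertices):
    """Label each vertex with the smallest vertex id reachable from it."""
    neighbors = {v: set() for v in vertices}
    for a, b in edges:
        neighbors[a].add(b)
        neighbors[b].add(a)

    labels = {v: v for v in vertices}
    active = set(vertices)      # vertices whose label changed in the last superstep
    while active:
        # Message phase: each active vertex sends its label to its neighbours.
        inbox = {}
        for v in active:
            for n in neighbors[v]:
                inbox.setdefault(n, []).append(labels[v])
        # Compute phase: each vertex keeps the minimum of what it received.
        active = set()
        for v, msgs in inbox.items():
            best = min(msgs)
            if best < labels[v]:
                labels[v] = best
                active.add(v)
    return labels


labels = pregel_min_label([(1, 2), (2, 3), (4, 5)], [1, 2, 3, 4, 5, 6])
```

The loop stops when no vertex changes its label, which is the usual stopping rule in this style: the computation halts once no messages lead to further updates.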

4:54

Next, we have SparkR. It was introduced in Apache Spark 1.4

5:02

So, from Apache Spark 1.4 onwards, we have SparkR

5:07

The SparkR DataFrame is its key component. The data frame is a fundamental data structure

5:12

in the R programming language. In Spark, we have the option to write or develop

5:19

Spark applications using the R language, and the SparkR DataFrame is the key

5:25

component. The data frame is a fundamental data structure in the R programming language

5:31

R also provides facilities for data manipulation, calculation, and graphical

5:38

display. So, these are the six components. We have explained them with a diagram,

5:43

and we will be working with them and discussing these concepts later on

5:48

in our other videos. Please watch all of them, and thanks for watching this one
