RDD in Spark

Show More Show Less View Video Transcript

0:00

In this video we are discussing RDD in Spark

0:03

RD stands for resilient distributed data set. It is one data structure with the help of which all the data sets which will be

0:12

deciding in this RDD will be distributed onto multiple different servers and nodes

0:18

So whenever computations are required, the competitions can be done simultaneously in parallel

0:23

on multiple different servers and nodes where these data sets are available

0:28

So, let us go for more discussion on this RDD in Spark

0:33

So, what is RDD in Spark? RDD stands for resilient distributed dataset

0:41

It is the basic data structure of Apache Spark. And each and every data set in RDD is logically partitioned across different servers

0:52

And using this partition's different nodes and clusters can do the competition in parallel

0:57

at the same time. And that's why RDD is faster in our operations. So, RDD is an immutable

1:05

collection of objects So it is immutable collection of objects So RDD are resilient so they are fault tolerant So as a result of that it uses the DAG and using DAG it is able to recompute missing partitions if there is any and it is

1:24

distributed because data can be stored on multiple different nodes or servers and finally RDD is a data set so the user can load data set externally

1:35

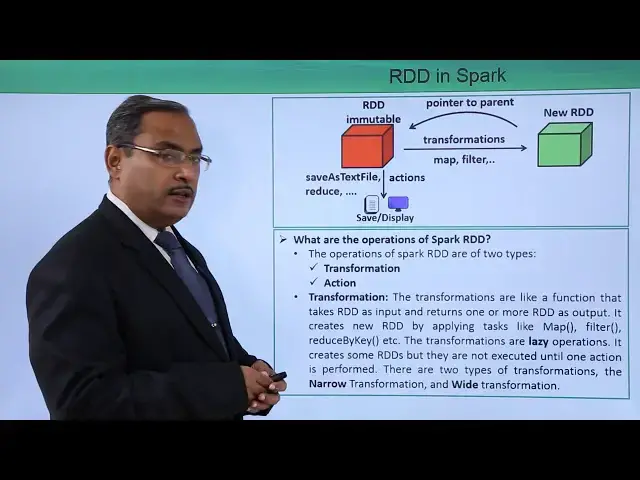

using the CSV files or JSON files according to the recommend availability now consider this very diagram so here actually we are getting two

1:47

main operations one is the transformations another one is the actions to two main

1:52

operations are there one is the transformations and another one is the actions

1:56

now what is the basic difference between these transformations and actions in

2:00

case of transformations will be getting another new odd dd from the previous one

2:05

but in case of actions no separate RDD will be created from the previous one. So, that is the main difference between the transformations and actions

2:15

So at first we are going for these two different operations into details

2:20

So what are the operations of SparkRDD The operations of SparkRDD are of two types So the first one is the transformation and the next one is the action So transformation The transformations are like a function that takes RDD as input and returns

2:40

one or more RDD as output. Here you can have multiple different methods we can execute here

2:47

So it creates a new RDD by applying tasks like our map method, filter method, reduce by key method, etc

2:56

it will be creating multiple new oddidies from the parent one the transformations are lazy

3:03

operations that means it will not get pre-computed and it creates some odddids but they are not executed

3:11

until one action is performed so until the actions are getting performed no new art ds are going

3:18

to get created so that's why it is known as lazy operation so there are two types of transformations

3:24

are possible and they are the narrow transformation and the white transformation

3:30

So that is about the required discussion on transformation. So let us go for the actions

3:36

Now we are discussing action here. The final result of RDT competition is the action And it uses the DAG to execute the tasks and at first it loads the data into the original RDD and then performs all intermediate transformations jobs and finally returns to the driver program So it is not going to create any kind of new RDD at the end of the actions Now what are the different actions we can carry out Some actions in Spark are first method or say take method

4:12

reduce method, collect method and count method, etc. Using transformation, we can create

4:19

RDD from another existing RDD. In the previous diagram also we have shown that using

4:24

transformations, the new RDD or multiple new RDs will be created from the mother one

4:31

So using transformations, we can create RDD from another existing RDD to work with the actual

4:38

data set, we should use the actions. And during the execution of actions, it does not create any new RDD, so its outcome can be stored onto the

4:48

save, onto certain files or display that one on the console. So that is our RDD in Spark. So we have

4:56

discussed this one with some discussions and with one proper diagram. Thanks for watching this video

#Data Management

#Programming

#Programming

#Software

#Computer Science