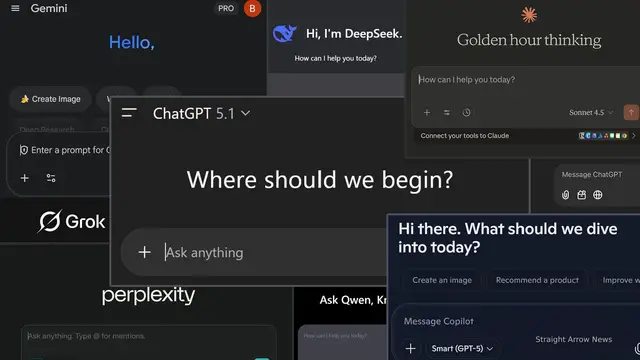

AI tools like Google Gemini and other chatbots are rapidly reshaping how people access information, but they can also be unreliable.


0:00

AI search engines and chatbots keep rolling out new updates

0:04

with companies claiming they're more helpful and more powerful than ever. Google released its Gemini 3 Pro this week, the latest update to its artificial intelligence

0:14

system. Microsoft's Copilot chat is expanding its integration into Outlook, Word, and other

0:21

Microsoft programs. And OpenAI recently launched its own search engine, Atlas, powered by its

0:28

AI engine, ChatGPT. With so many ways to search the web, what source do you turn to for news?

0:36

If you're relying on an AI-powered chatbot or search engine, then this story is for you.

0:45

Jeffrey Blevins is a professor at University of Cincinnati and a faculty fellow of the Center

0:51

for Cyber Strategy and Policy. He spoke with Straight Arrow News about using artificial

0:56

intelligence sources or artificial algorithms, as he likes to call it. I've never been comfortable with the term intelligence, right? Artificial, I'm good with.

1:07

And to me, you know, algorithm is just a much better, you know, moniker for that.

1:14

Blevins says AI can be used for a variety of tasks. But when it comes to news consumption

1:20

and reliable, verifiable information, it still has a long way to go.

1:25

Well, there seems to be all this praise about it and all this hype. And I think there's a real disconnect there. It's like, yeah, I've seen Sora 2 in terms of how well it can make deepfake videos, and they are really impressive. But that's a very different thing than when we're talking about relying on, you know, information integrity.

1:50

Blevins says AI can be a first step when researching news, politics, or current events.

1:57

But it should never be the only source. Because as advanced as AI technology has become, it is still flawed. When we asked

2:05

ChatGPT who the president of the United States was, it responded Donald J. Trump, but showed a

2:11

picture of former President Joe Biden. When we asked who was in the photo it provided, it doubled

2:17

down, still calling it Trump. Even though it was wrong, ChatGPT, like other AI systems,

2:23

carries a disclaimer that it can make mistakes. Google's Gemini-powered AI search results

2:29

display a similar reminder that they may include mistakes. And we should absolutely not be relying on it.

2:37

Again, at best, it's a first source. And then actually, I would take issue with the word source.

2:45

It might be a first step. What can it gather quickly? I need to be willing to take the next action steps

2:52

that go to different sources to try and verify that. Blevins also referred to a high-profile AI blunder

2:59

in the Chicago Sun-Times that was then shared in the Philadelphia Inquirer.

3:05

A journalist was tasked with compiling a summer reading list, and the reporter had AI do the assignment.

3:12

It mixed up names, you know, authors with titles, you know, had those mismatched. You know, sometimes the titles weren't perfect, and sometimes they were books that didn't exist at all or authors that didn't exist at all.

3:27

So that's a pretty big miss. Blevins says using AI as a starting point would have been fine, but it should never have been the final product.

3:36

So if we go back, for instance, to, you know, the Chicago Sun-Times freelancer, okay, could have put that in and then went and then fact-checked other sources, you know, to get that information.

3:49

What concerns me is that it's likely to develop more bad habits.

3:54

It's going to encourage us to be lazy, to simply take, you know, one source as it, right?

4:02

When you ask AI systems to describe their own weaknesses in news gathering, they acknowledge a number of them.

4:10

Take OpenAI's new search engine, Atlas. When we asked what challenges it faces in gathering and delivering news, it replied, short answer, everything.

4:20

Long answer, buckle up. It then listed issues such as accuracy, information verification, and information overload, adding that there is more content than any human brain can handle.

4:33

And when news outlets issue corrections or retractions, can AI keep up with those changes?

4:39

Atlas told us, short answer, no, saying there was no indication it tracks or flags corrections to stories that it crawls.

4:47

Even though we have this plethora now, it has really eroded trust. We had more trust in journalists, the institution of journalism, in the past with fewer sources than we do now. And Blevins says that even though users can

5:04

customize these AI algorithms to fit their interests, including news, remember that AI

5:11

has its own interests too. I would say, you know, a bigger concern would be, you know, what is

5:19

you know, the algorithm really programmed to do. You know, what is, you know, there's a commercial

5:24

interest here, right? And that is to keep you engaged. So, you know, the longer that, you know

5:31

it can keep you in front of the screen, you know, clicking on items, making comments

5:37

sharing those, you know, those items, those are all, you know, signs of engagement.

5:44

The latest Pew Research survey shows 62% of Americans interact with artificial intelligence at least once a week, making it a massive market that is rapidly growing.

5:57

A big thank you to Jeffrey Blevins for taking the time to talk with us about AI and how to use it responsibly when consuming news.

6:06

And related to this story, Straight Arrow News recently reported on an international study that found 45% of AI responses to news prompts had at least one significant issue, and 81% had some form of issue.

6:22

You can find that story on our website or mobile app by searching AI News Report.

6:28

For Straight Arrow News, I'm Cara Rucker.

#news

#Politics

#Media Critics & Watchdogs

#Technology News