Features of Apache Spark


0:00

In this video we are discussing the features of Apache Spark. There are eight different features we are going to introduce: speed, reusability, advanced analytics, in-memory computing, real-time stream processing, lazy evaluation, dynamic in nature, and fault tolerance. Let us discuss them one by one.

0:31

We are starting with speed. Spark is a lightning-fast cluster computing technology that supports in-memory computation, which is why its computations can run up to 100 times faster than Hadoop MapReduce. Apache Spark achieves this high data processing speed by reducing the number of read/write operations on disk: because the computations are done in memory, disk reads and writes are minimized.
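
To make the disk I/O argument concrete, here is a toy model in plain Python, not Spark code. It assumes a hypothetical three-stage pipeline and only counts simulated disk operations: a MapReduce-style run writes every intermediate result to disk and reads it back for the next stage, while an in-memory run passes intermediate results straight to the next stage. The counters stand in for I/O cost and say nothing about real timings.

```python
import json
import os
import tempfile

# Hypothetical three-stage pipeline: each stage is a plain Python function.
STAGES = [
    lambda xs: [x * 2 for x in xs],        # stage 1: double each value
    lambda xs: [x for x in xs if x % 3],   # stage 2: drop multiples of 3
    lambda xs: [x + 1 for x in xs],        # stage 3: add one
]


def run_with_disk(data, workdir):
    """MapReduce-style: persist every intermediate result and read it back."""
    io_ops = 0
    current = data
    for i, stage in enumerate(STAGES):
        result = stage(current)
        path = os.path.join(workdir, f"stage_{i}.json")
        with open(path, "w") as f:   # write the stage output to disk
            json.dump(result, f)
        io_ops += 1
        with open(path) as f:        # the next stage reads it back from disk
            current = json.load(f)
        io_ops += 1
    return current, io_ops


def run_in_memory(data):
    """Simplified in-memory run: intermediate results never touch the disk."""
    current = data
    for stage in STAGES:
        current = stage(current)
    return current, 0


data = list(range(10))
with tempfile.TemporaryDirectory() as tmp:
    disk_result, disk_io = run_with_disk(data, tmp)
mem_result, mem_io = run_in_memory(data)

print(disk_result == mem_result)  # prints: True (same answer either way)
print(disk_io, mem_io)            # prints: 6 0
```

Both runs produce the same result; the only difference is how many times the data makes a round trip through storage.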

1:10

next one is the reusability spark codes

1:14

can be easily converted to batch

1:16

processing or join stream on historical

1:19

data and that's why it is having the

1:21

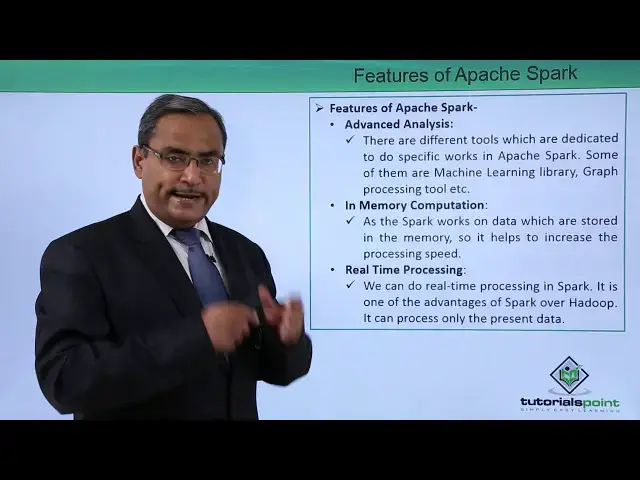

high reusability next one is the
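
The reuse idea can be sketched in plain Python (this is not Spark's API): the logic is written once as a function, applied to a historical batch, and then applied again to each incoming micro-batch, whose fresh results are joined against the historical totals. The function name `count_words` and the sample data are hypothetical.

```python
from collections import Counter


def count_words(lines):
    """Logic written once (hypothetical example): word counts for some lines."""
    return Counter(word for line in lines for word in line.lower().split())


# Batch job: apply the logic to stored, historical data.
historical = count_words(["spark is fast", "spark is general"])

# Streaming job: reuse the same function on each incoming micro-batch and
# join the fresh counts with the historical totals.
micro_batches = [["Spark streams"], ["spark is reusable"]]
results = []
for batch in micro_batches:
    fresh = count_words(batch)
    joined = {word: (fresh[word], historical.get(word, 0)) for word in fresh}
    results.append(joined)
    print(joined)
```

Each printed entry pairs a word's count in the current micro-batch with its historical count; no separate streaming version of `count_words` had to be written.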

1:27

Next one is advanced analytics. Apache Spark has different tools dedicated to specific kinds of work. In our video on Apache Spark components, we showed that the ecosystem has six different components, and those tools are dedicated to different kinds of analysis-oriented activities. Some of them are the machine learning library, known as MLlib, and the graph processing library, known as GraphX, among others.

2:08

Next one is in-memory computation, a very important feature. Spark works on data stored in memory, and memory access time is much lower than disk access time. Because the computation is done in memory, the input data is held in memory, and the intermediate results produced during processing are kept in memory as well. Working on in-memory data in this way increases the processing speed.
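
Keeping data in memory pays off most for iterative workloads, which touch the same data again and again. In this hypothetical plain-Python sketch, `load_from_disk` stands in for an expensive disk read, and a toy iterative computation either reloads its input on every iteration or loads it once and reuses the in-memory copy, which is roughly what calling `cache()` on a dataset in Spark is meant to achieve. Only the read counter is meaningful here.

```python
# Simulated "disk": counting reads stands in for disk-access cost.
DISK = {"points": [1.0, 2.0, 3.0, 4.0]}
disk_reads = 0


def load_from_disk(key):
    """Hypothetical stand-in for an expensive disk read."""
    global disk_reads
    disk_reads += 1
    return list(DISK[key])


def iterate(get_data, iterations=5):
    """Toy iterative algorithm: move an estimate halfway toward the mean each step."""
    estimate = 0.0
    for _ in range(iterations):
        points = get_data()
        mean = sum(points) / len(points)
        estimate += 0.5 * (mean - estimate)
    return estimate


# Without caching: the input is re-read from "disk" on every iteration.
disk_reads = 0
uncached = iterate(lambda: load_from_disk("points"))
reads_without_cache = disk_reads

# With caching: read once, then every iteration reuses the in-memory copy.
disk_reads = 0
cached_points = load_from_disk("points")
cached = iterate(lambda: cached_points)
reads_with_cache = disk_reads

print(uncached == cached, reads_without_cache, reads_with_cache)  # prints: True 5 1
```

The answer is identical in both runs; keeping the data in memory only removes the repeated reads.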

2:43

Next one is real-time stream processing. We can do real-time processing in Spark, and this is one of its advantages over Hadoop MapReduce, which can only process data that is already stored. Real-time processing means that the processing and analysis are done on the current input as it arrives, and the outputs are obtained right away.
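
As a rough illustration of processing data as it arrives, the sketch below models a stream as a Python generator and handles it in small micro-batches, producing an output for each batch immediately instead of waiting for a complete dataset. Spark Streaming's DStream API also processes data in micro-batches, but this is plain Python, not Spark code: the `sensor_stream` source, the batch size of 3, and the threshold of 30 are assumptions made for the example.

```python
from itertools import islice


def sensor_stream():
    """Hypothetical event source: readings arrive one at a time."""
    for reading in [21, 22, 35, 23, 40, 22, 21]:
        yield reading


def micro_batches(stream, size):
    """Group an incoming iterator into small batches as the data arrives."""
    it = iter(stream)
    while batch := list(islice(it, size)):
        yield batch


alerts = []
for batch_no, batch in enumerate(micro_batches(sensor_stream(), size=3)):
    # Each batch is analysed as soon as it is complete, so the output
    # reflects the current input rather than a stored, finished dataset.
    high = [r for r in batch if r > 30]
    alerts.append((batch_no, max(batch), high))
    print(f"batch {batch_no}: max={max(batch)}, readings above 30={high}")
```

With a real unbounded source the loop would simply keep running, emitting one result per micro-batch.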

3:06

Next one is lazy evaluation. Spark transformations are not executed on the go: they are only recorded, and nothing is computed before an action is triggered. Only when an action is triggered are the respective transformations actually carried out on the data.
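
Lazy evaluation can be modelled with a small plain-Python class. In this sketch the `LazyDataset` class is hypothetical: transformations such as `map` and `filter` only record a step and return a new dataset, leaving the original untouched, and nothing runs until an action such as `collect` or `count` is called. The method names mirror Spark's RDD vocabulary, but this is a toy model of the idea, not Spark's implementation.

```python
class LazyDataset:
    """Toy model of lazy evaluation: transformations are recorded, actions execute."""

    def __init__(self, source, steps=()):
        self._source = source        # the original data
        self._steps = tuple(steps)   # recorded transformations, in order

    # Transformations: return a new dataset; the original is never modified.
    def map(self, fn):
        return LazyDataset(self._source, self._steps + (("map", fn),))

    def filter(self, pred):
        return LazyDataset(self._source, self._steps + (("filter", pred),))

    # Actions: only here is the recorded plan actually executed.
    def collect(self):
        out = []
        for item in self._source:
            keep = True
            for kind, fn in self._steps:
                if kind == "map":
                    item = fn(item)
                elif not fn(item):   # a "filter" step rejected this item
                    keep = False
                    break
            if keep:
                out.append(item)
        return out

    def count(self):
        return len(self.collect())


calls = []


def square(x):
    calls.append(x)   # record when the function really runs
    return x * x


nums = LazyDataset([1, 2, 3, 4])
squares = nums.map(square).filter(lambda x: x > 4)
print(calls)              # prints: [] (only a plan has been built)
print(squares.collect())  # prints: [9, 16] (the action triggers the work)
print(calls)              # prints: [1, 2, 3, 4]
```

Deferring the work until an action lets the whole chain of steps run in a single pass over the data.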

3:29

Next one is being dynamic in nature. Spark provides 80 high-level operators, which make it easy to build parallel applications, so the work in those applications runs in parallel and simultaneously.
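
To give a feel for how high-level operators hide parallelism, here is a plain-Python sketch using the standard library's `concurrent.futures`: the data is split into partitions, a map-style step runs on each partition in parallel, and a reduce step combines the partial results. The `partition` helper and the choice of 4 partitions are assumptions for the example, and threads are used only to keep the sketch simple; Spark itself spreads partitions across executors in a cluster.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def partition(data, n):
    """Split a list into n roughly equal partitions (hypothetical helper)."""
    size = -(-len(data) // n)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def sum_of_squares(part):
    """Work done independently on one partition."""
    return sum(x * x for x in part)


data = list(range(1, 101))
parts = partition(data, 4)

with ThreadPoolExecutor(max_workers=4) as pool:
    # "map": process every partition in parallel
    partials = list(pool.map(sum_of_squares, parts))

# "reduce": combine the partial results into one answer
total = reduce(lambda a, b: a + b, partials)
print(len(parts), total)  # prints: 4 338350
```

The caller only expresses "map, then reduce"; how the partitions are scheduled is left to the executor.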

3:45

Next one is fault tolerance. Using Spark's core abstraction, RDDs (Resilient Distributed Datasets), we can handle failures. An RDD is a data structure in which a dataset is divided into partitions that are distributed across multiple servers. Each RDD also records its lineage, the sequence of transformations used to build it, so if a server fails, the lost partitions can be recomputed from the original data. As a result, the probability of data loss is reduced, and that's why the system is known as fault tolerant: it reduces data loss in our Spark system.
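
The lineage-based recovery described above can be sketched in plain Python. In this toy model, which is not Spark's implementation, the hypothetical `ToyRDD` class splits its data into partitions and keeps its lineage (the source partitions plus the list of transformations applied to them). When a partition is "lost", it is rebuilt by replaying that lineage on the matching source partition instead of relying on a stored copy. For brevity the toy computes its partitions eagerly, unlike real RDDs, which are lazy.

```python
class ToyRDD:
    """Partitioned dataset that remembers its lineage so lost partitions can be rebuilt."""

    def __init__(self, source_partitions, transforms=()):
        self.source = source_partitions      # original input, split into partitions
        self.transforms = list(transforms)   # lineage: functions applied in order
        self.partitions = [self._compute(i) for i in range(len(source_partitions))]

    def _compute(self, i):
        """Compute partition i from the source by replaying the lineage."""
        part = list(self.source[i])
        for fn in self.transforms:
            part = [fn(x) for x in part]
        return part

    def map(self, fn):
        return ToyRDD(self.source, self.transforms + [fn])

    def lose_partition(self, i):
        """Simulate a server failure that wipes out one partition."""
        self.partitions[i] = None

    def recover(self):
        """Rebuild any missing partition from its lineage."""
        for i, part in enumerate(self.partitions):
            if part is None:
                self.partitions[i] = self._compute(i)

    def collect(self):
        return [x for part in self.partitions for x in part]


rdd = ToyRDD([[1, 2], [3, 4], [5, 6]]).map(lambda x: x * 10).map(lambda x: x + 1)
before = rdd.collect()
rdd.lose_partition(1)   # partition 1 is gone
rdd.recover()           # recomputed from source + lineage
print(before == rdd.collect(), rdd.collect())  # prints: True [11, 21, 31, 41, 51, 61]
```

Only the lost partition is recomputed; the other partitions are left as they were.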

4:18

So these are the eight different features of Apache Spark that we have discussed in this video. Thanks for watching.
