Spark Commands


0:00

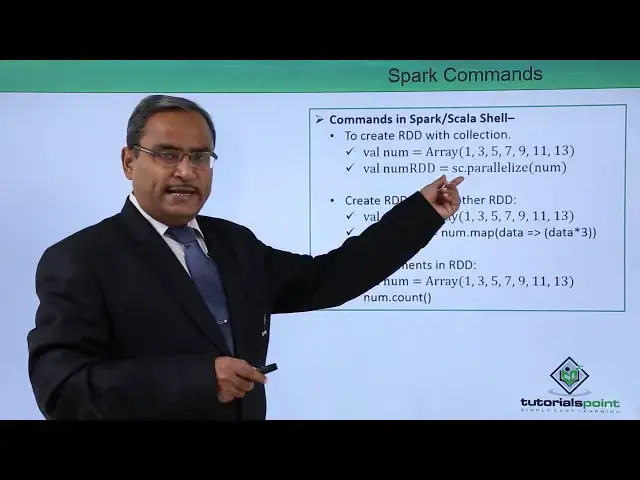

In this video, we are discussing Spark commands.

0:04

This video also includes a practical demonstration to aid your understanding.

0:10

So let us discuss some of the Spark commands that we use on a very regular basis.

0:15

Spark is developed in Scala, so we run Spark commands in the Spark shell, and

0:23

this shell comes with the Scala prompt. To start Spark, we use the

0:29

spark-shell command. How do we invoke it? At the dollar

0:36

prompt, you type the path of your Spark installation followed by /bin/spark-shell,

0:42

and you get the Scala prompt. Now, how do we create an RDD from a file?

0:49

We write val textFile = sc.textFile, where sc is the SparkContext,

0:55

so here we are calling .textFile with the file path.

0:59

Using this command, we can create an RDD from a file. The name of the file

1:05

with its path should be given within double quotes, as the argument to the textFile method.
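For readers who want to try the textFile step outside spark-shell, here is a minimal sketch of it as a standalone Scala program. It assumes spark-core is on the classpath; because only the shell predefines sc, the sketch creates a local SparkContext itself and writes a temporary file with sample lines in place of a real file path. The names TextFileRdd, withLocalContext and readLines are illustrative, not part of Spark.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.{Files, Path}

import org.apache.spark.{SparkConf, SparkContext}

object TextFileRdd {
  // Runs `body` with a local SparkContext (spark-shell provides `sc` instead) and stops it afterwards.
  private def withLocalContext[T](body: SparkContext => T): T = {
    val sc = new SparkContext(new SparkConf().setMaster("local[1]").setAppName("text-file-rdd"))
    try body(sc) finally sc.stop()
  }

  // Writes `lines` to a temporary file (stand-in for a real path), loads it with sc.textFile
  // and returns the RDD's elements.
  def readLines(lines: Seq[String]): Array[String] = {
    val file: Path = Files.createTempFile("my-test-file", ".txt")
    Files.write(file, lines.mkString("\n").getBytes(StandardCharsets.UTF_8))
    try {
      withLocalContext { sc =>
        // The path is passed as a string; every line of the file becomes one element of the RDD.
        val textFile = sc.textFile(file.toUri.toString)
        textFile.collect()
      }
    } finally {
      Files.deleteIfExists(file)
    }
  }

  def main(args: Array[String]): Unit =
    readLines(Seq("this is a test file", "we can create spark RDD with it")).foreach(println)
}
```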

1:13

The next one is creating an RDD from a collection. Here we have a collection of integers:

1:19

val num = Array(...), holding a set of integers. Then

1:25

val numRdd = sc.parallelize(num). We defined num above, and it is passed as the input argument to this method.
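A comparable sketch for parallelize, under the same assumptions (spark-core on the classpath, a local SparkContext standing in for the shell's sc). It also passes parallelize's optional second argument, numSlices, to show that it sets how many partitions the collection is split into. CollectionRdd and distribute are hypothetical names.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object CollectionRdd {
  // Runs `body` with a local SparkContext (spark-shell provides `sc` instead) and stops it afterwards.
  private def withLocalContext[T](body: SparkContext => T): T = {
    val sc = new SparkContext(new SparkConf().setMaster("local[2]").setAppName("collection-rdd"))
    try body(sc) finally sc.stop()
  }

  // Distributes a driver-side Array as an RDD and returns (elements, number of partitions).
  def distribute(num: Array[Int], numSlices: Int): (Array[Int], Int) = withLocalContext { sc =>
    // parallelize(seq, numSlices) splits the local collection into numSlices partitions.
    val numRdd = sc.parallelize(num.toSeq, numSlices)
    (numRdd.collect(), numRdd.partitions.length)
  }

  def main(args: Array[String]): Unit = {
    val (elements, partitions) = distribute(Array(1, 2, 3, 4, 5), numSlices = 2)
    println(s"elements = ${elements.mkString(", ")}, partitions = $partitions")
  }
}
```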

1:35

The next one is creating an RDD from another RDD. Here we have an array collection,

1:41

val num = Array(...) with a set of integers, distributed as numRdd with sc.parallelize(num).

1:45

Then val rddCopy =

1:51

numRdd.map(data => data * 3). In this way we can create one

1:58

RDD from another RDD, because map returns a new RDD. Next, counting the elements in an RDD: again we have val num = Array(...) with a set of integers, and then

2:09

numRdd.count(), which gives you the number of elements in the

2:14

RDD. Next, we go for the filter operation on an RDD: val textFile =

2:23

sc.textFile, with the file name and its path passed as the input

2:28

parameter. Then val temp = textFile.filter. What is the filtering

2:34

criterion? line => line.contains("ABC"). So only the lines

2:41

that contain the text ABC are kept. Next, getting the first element from an RDD: val textFile =

2:48

sc.textFile with the file path, and then textFile.first(), which

2:55

returns the first element of the RDD. The next one is counting the number of partitions in an

3:02

RDD. In that case, use val textFile = sc.textFile, giving the file with its

3:08

path as the input argument, enclosed within double quotes, and then

3:14

textFile.partitions.length, which gives you the number of partitions in the RDD.
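The remaining commands from this list can be sketched together in one standalone Scala program, again assuming spark-core on the classpath and a local SparkContext in place of the shell's sc. Note that num has to be an RDD (here built with sc.parallelize) for map and count to be RDD operations; on a plain Scala Array, map would simply return another Array. The sample lines, the object RddCommands and the case class TextStats are illustrative.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.Files

import org.apache.spark.{SparkConf, SparkContext}

object RddCommands {
  // Results of the text-file commands: count, filter, first and partitions.length.
  final case class TextStats(lineCount: Long, matching: Seq[String], firstLine: String, numPartitions: Int)

  // Runs `body` with a local SparkContext (spark-shell provides `sc` instead) and stops it afterwards.
  private def withLocalContext[T](body: SparkContext => T): T = {
    val sc = new SparkContext(new SparkConf().setMaster("local[1]").setAppName("rdd-commands"))
    try body(sc) finally sc.stop()
  }

  // RDD from another RDD: map returns a new RDD and leaves numRdd unchanged.
  def mapAndCount(num: Seq[Int]): (Seq[Int], Long) = withLocalContext { sc =>
    val numRdd = sc.parallelize(num)
    val rddCopy = numRdd.map(data => data * 3)
    (rddCopy.collect().toSeq, numRdd.count())
  }

  // count, filter, first and partitions.length on an RDD loaded from a temporary text file.
  def textStats(lines: Seq[String], word: String): TextStats = {
    val file = Files.createTempFile("rdd-commands", ".txt")
    Files.write(file, lines.mkString("\n").getBytes(StandardCharsets.UTF_8))
    try {
      withLocalContext { sc =>
        val textFile = sc.textFile(file.toUri.toString)
        val temp = textFile.filter(line => line.contains(word)) // keeps only lines containing `word`
        TextStats(
          lineCount = textFile.count(),              // number of elements, i.e. lines
          matching = temp.collect().toSeq,
          firstLine = textFile.first(),              // first element of the RDD
          numPartitions = textFile.partitions.length // how many partitions the RDD has
        )
      }
    } finally {
      Files.deleteIfExists(file)
    }
  }

  def main(args: Array[String]): Unit = {
    println(mapAndCount(Seq(1, 2, 3)))
    println(textStats(Seq("first line", "a line with ABC", "another ABC line"), "ABC"))
  }
}
```

With local[1], a small file like this typically ends up in a single partition, which is consistent with the value of one reported later in the demonstration; the exact partition count depends on the environment and file size.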

3:20

So these are some of the commonly used Spark commands. Let us now go through a practical demonstration to show you better how these commands work. In this demonstration, we shall discuss how to execute several different Spark commands on our system.

3:38

First, we're going to create a data file. I'm pressing Ctrl+Alt+T to open a new terminal here.

3:46

This is the terminal we'll be working in. First we create a data file, and its name will be

3:57

mytestfile.txt. I'm creating it and putting some text in it: "this is a test file",

4:07

"we can create spark RDD with it", "spark can find number of lines

4:18

etc", and then some symbols. After that, I press Ctrl+D to save and exit.

4:28

Let us see the current content of this file.

4:34

Okay, I have typed cat mytestfile.txt, and we are

4:43

getting the file content. Now we shall create some RDDs. First, we're going to

4:47

start the Spark shell. As we know, for that we execute

4:54

spark-shell, and then the Spark shell gets loaded and the Scala prompt

5:01

appears. First, we shall create a Spark RDD from the data file we just created.

5:11

The command will be val myFile, or you can also call it

5:17

myFileRdd = sc.textFile, where sc is the SparkContext, and then we are

5:27

giving the path, that is, /home/bigdata/mytestfile.txt, then

5:38

the closing double quote and the closing bracket. So now I have created

5:43

myFileRdd; this particular RDD is created from the data file we made. Now that

5:52

it is created, we can call myFileRdd.collect(), and the current content

6:03

will be shown in the form of an array. You can see the respective

6:07

array content. There is another, smarter way to print it, so I shall go

6:11

for myFileRdd.foreach(println). It reads all the lines of the file,

6:22

and they get printed. You see here we have the respective content.
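The two ways of printing used above differ in where the printing happens. collect() returns all elements to the driver as an Array, while foreach(println) runs println inside the tasks: in local mode, as in this shell session, the output shows up in the console, but on a cluster it goes to the executors' stdout instead. The sketch below, assuming spark-core on the classpath, contrasts the two and adds take(n) as a way to print only a few elements; it uses parallelize over two sample lines as a stand-in for myFileRdd, and PrintRdd is a hypothetical name.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object PrintRdd {
  // Runs `body` with a local SparkContext (spark-shell provides `sc` instead) and stops it afterwards.
  private def withLocalContext[T](body: SparkContext => T): T = {
    val sc = new SparkContext(new SparkConf().setMaster("local[1]").setAppName("print-rdd"))
    try body(sc) finally sc.stop()
  }

  // collect() ships every element back to the driver as a local Array.
  def collected(lines: Seq[String]): Array[String] = withLocalContext { sc =>
    sc.parallelize(lines).collect()
  }

  def main(args: Array[String]): Unit = withLocalContext { sc =>
    // Stand-in for myFileRdd: two sample lines distributed with parallelize.
    val linesRdd = sc.parallelize(Seq("this is a test file", "we can create spark RDD with it"))

    // println runs inside the tasks; in local mode the output appears in this console,
    // on a cluster it would go to the executors' stdout.
    linesRdd.foreach(println)

    // Driver-side printing: bring the data back first (only sensible for small RDDs).
    linesRdd.collect().foreach(println)

    // Driver-side printing of just the first n elements.
    linesRdd.take(1).foreach(println)
  }
}
```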

6:28

So that is our RDD, created directly from the file, and we have also

6:33

printed the file content. Now let us create an RDD from an array. In that

6:39

case, the command will be val myArray = Array(1, ...). First I define the array here, starting with 1, and in this way I have put seven values into it. Okay, the array has been created. Now we shall

7:00

go for val newRdd; we are creating an RDD from this array with the SparkContext, so it will be

7:13

sc.parallelize(myArray). Then we shall call

7:23

newRdd.collect(), and you can see it is shown in array format. We can also

7:31

print the same using foreach:

7:38

newRdd.foreach(println). Here you can see they have been printed; all this data has been

7:50

printed on separate lines. So from the array, we have created an

7:55

RDD. Okay, now let us copy and update each element of the array RDD. How do we do that?

8:01

We shall write val updateRdd = newRdd.map(...), using the newRdd

8:13

we created earlier. Inside map, each element is mapped to a new

8:24

value, element * 10 + 5. So we give an

8:35

arithmetic expression, and updateRdd now holds the updated values. Let us print them, that is,

8:43

updateRdd.foreach(println). If you run this,

8:54

it reads all the elements of the RDD and they get printed. You can see

8:57

they have been printed this way. Why does the first one

9:02

come out as 15? Because the first value was 1: 1 times 10 is 10, plus 5 gives 15.

9:08

The second value was 3: 3 times 10 is 30, plus 5 gives

9:13

35. The next value was 5: 5 times 10 is 50, plus 5 gives 55. In this way you can see that

9:19

we have derived updated content from the RDD (newRdd itself is unchanged, because map returns a new RDD), and the

9:26

method we used here is map. Now we shall count the number of

9:32

elements in the RDD. They can be counted this way:

9:37

newRdd.count(). We can call it in

9:43

this way, and you can see we get 7; we had seven values there, so

9:48

that's why the value 7 is printed. Now we shall call

9:53

myFileRdd.count(), and we get 4 because we have

10:01

four lines in the respective file; that's why the count is

10:06

also showing 4 here. Now we shall filter those lines from the file RDD

10:12

where the given word is found. So let us go for the filtering. For that, I am going for val

10:25

filterTextFile, which is equal to

10:38

myFileRdd.filter. Let us suppose we check each and every line, and

10:44

the lines that get printed will be the ones containing the word spark.

10:53

So just look at the command: here we have

10:59

val filterTextFile = myFileRdd.filter, where, out of each and every line, only those lines come through

11:05

that contain the word spark. So we have written

11:10

line => line.contains("spark"). That is the command, and we go for

11:18

the execution. Now let me call

11:27

filterTextFile.foreach(println). If you execute it, you can see that only

11:34

two lines come out, only the two lines that contain the word

11:39

spark: "we can create spark RDD with it" contains spark, and

11:45

"spark can find number of lines etc" contains it as well.

11:51

In this way we have gone through this, that is,

11:56

filterTextFile.foreach(println). So here we can easily see how all these

12:01

things are created. Now, for comparison, let me run foreach on the original RDD. Let me check the

12:13

RDD name here: the name is myFileRdd. So if I go for

12:20

myFileRdd.foreach(println), you see we have

12:28

these four lines, and out of these four lines, only two have the word spark in them.

12:33

That's why those two lines got printed. Now, if I print the first line of the

12:39

text RDD, what will happen? We shall go for myFileRdd.first().

12:49

I think the first line of this file starts with a

12:55

capital letter, and yes, you can see that only the first line is printed.

13:00

So in this way, you can see that we can do multiple different

13:03

operations here using Spark commands. Next, we are going to find the number of partitions of the text file

13:12

RDD. Let us go for

13:18

myFileRdd.partitions.length. You see it is telling us that the

13:28

number of partitions is just one. So in this demonstration, we have shown

13:33

the different commands with the help of which we can work with the Spark

13:39

environment at the Scala prompt. Thanks for watching this video.

#Programming


#Software