MapReduce and Design Patterns - Average MapReduce


0:00

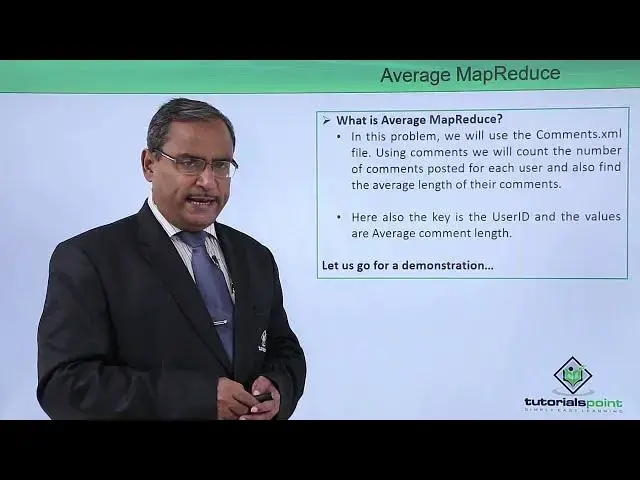

In this video we are discussing the average MapReduce.

0:04

Now, what is the average MapReduce? In this problem we will use comments.xml.

0:10

So we have another XML file here, comments.xml, and this comments.xml file will be used in this average MapReduce.

0:19

Using the comments, we will count the number of comments posted by each user and

0:25

also find the average length of their comments. That means it will count the number of comments made by each user and the average length

0:35

of those comments. Both get calculated here. Here also the key is the user ID, and the values are the comment count and the average comment length.

0:43

That is the output we are going to get. So let us go for a practical demonstration, for an easy understanding of this concept.

0:52

So here we are implementing the average count MR task, which falls under the

0:59

summarization design pattern. comments.xml is under the folder /input/comments, so under this folder comments.xml is the file which is our

1:09

input file. Let us see the current content of this comments.xml.

1:15

It is an XML file with a huge amount of content, but here

1:20

we have shown only a part of it. Under the comments tag we have the

1:24

row tags, and each row has the ID, the post ID, the score, the text of the comment the user has made, the creation date, and also the user ID.
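To make the row layout concrete, here is a minimal sketch of reading the attributes of one row into a map, in the spirit of the XML-to-map helper described later in the video. The attribute names (`Id`, `PostId`, `Score`, `Text`, `CreationDate`, `UserId`), the sample values, and the class name `RowAttributes` are assumptions for illustration only; the video does not show them. A regular expression is used here instead of a real XML parser, so XML entities inside the values are not decoded.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper class, not the code shown in the video.
class RowAttributes {
    // Matches name="value" pairs inside a single <row ... /> line.
    private static final Pattern ATTRIBUTE = Pattern.compile("(\\w+)=\"([^\"]*)\"");

    /** Collects every attribute of one row line into a map, in document order. */
    static Map<String, String> xmlToMap(String rowLine) {
        Map<String, String> attributes = new LinkedHashMap<>();
        Matcher matcher = ATTRIBUTE.matcher(rowLine);
        while (matcher.find()) {
            attributes.put(matcher.group(1), matcher.group(2));
        }
        return attributes;
    }

    public static void main(String[] args) {
        // Made-up sample row; the attribute names are assumed.
        String row = "<row Id=\"1\" PostId=\"10\" Score=\"2\" Text=\"Nice post\" "
                + "CreationDate=\"2020-01-01T00:00:00.000\" UserId=\"7\" />";
        Map<String, String> parsed = xmlToMap(row);
        System.out.println(parsed.get("UserId") + " -> " + parsed.get("Text")); // prints "7 -> Nice post"
    }
}
```

Only the user ID and the comment text are needed for the average count task; the other attributes are parsed but ignored.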

1:37

So these are the fields under the row tag. In this way we have several such row tags

1:45

under the comments tag. So this is the content of comments.xml. Here we are going to have two

1:53

Java programs: one is AverageCountData.java and the other is AverageCountMRTask.java.

1:58

AverageCountData implements the

2:04

Writable interface. It has the constructor, AverageCountData,

2:09

it has some getter and setter methods, and two more methods are also there:

2:14

one is readFields and the other is write. The member variables are

2:18

the comment count and the average length. So these are the two member variables, and within the constructor we have initialized them. These are the respective getter and setter methods: getCommentCount, getAverageLength, setCommentCount, and setAverageLength.

2:33

We have readFields and write, and we have also overridden the toString method.

2:39

The average length you see here is simply assigned in setAverageLength.
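The shape of such a value class can be sketched as follows. This is not the AverageCountData source from the video: the field types (`long`, `double`) and the class name `AverageCountDataSketch` are assumptions. To stay runnable without Hadoop on the classpath, the sketch does not implement `org.apache.hadoop.io.Writable`, but its `write` and `readFields` methods have the same signatures that interface declares.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;

// Hypothetical stand-in for the value class described above.
class AverageCountDataSketch {
    private long commentCount;     // assumed type
    private double averageLength;  // assumed type

    AverageCountDataSketch() {
        this(0L, 0.0);
    }

    AverageCountDataSketch(long commentCount, double averageLength) {
        this.commentCount = commentCount;
        this.averageLength = averageLength;
    }

    long getCommentCount() { return commentCount; }
    void setCommentCount(long commentCount) { this.commentCount = commentCount; }
    double getAverageLength() { return averageLength; }
    void setAverageLength(double averageLength) { this.averageLength = averageLength; }

    /** Serializes both fields, in a fixed order. */
    public void write(DataOutput out) throws IOException {
        out.writeLong(commentCount);
        out.writeDouble(averageLength);
    }

    /** Reads the fields back in the same order they were written. */
    public void readFields(DataInput in) throws IOException {
        commentCount = in.readLong();
        averageLength = in.readDouble();
    }

    @Override
    public String toString() {
        return commentCount + "\t" + averageLength;
    }

    public static void main(String[] args) throws IOException {
        AverageCountDataSketch original = new AverageCountDataSketch(3, 12.5);
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        original.write(new DataOutputStream(bytes));

        AverageCountDataSketch copy = new AverageCountDataSketch();
        copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
        System.out.println(copy); // prints the count, a tab, then the average
    }
}
```

The important point is that `readFields` must read the fields in exactly the order `write` emits them. The `toString` method decides how each value would appear in a text output file.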

2:45

AverageCountMRTask is the main Java file here. It

2:50

has the mapper as an inner class, AverageCountMapper,

2:55

which inherits from the Mapper class, and also

3:02

the Reducer class, which is inherited by AverageCountReducer. Within

3:08

this AverageCountMapper we have a Text object for the output user ID and

3:14

an AverageCountData object; these are the two member variables. We are also

3:18

overriding the map method. xmlToMap is a method we have written which

3:24

converts the XML content to a HashMap, so it works out each

3:30

XML key and its value, and so on. Whatever xmlToMap returns

3:36

is kept in xmlParsed, and the user ID and the text obtained with xmlParsed.get

3:42

are kept in the user and comment text variables. Within the try block we

3:48

set the comment count to one with setCommentCount, and setAverageLength is given the

3:53

comment text length. So initially they are set this way, and then the output user

3:59

ID is set to the user, and then we write to the context the output user ID and

4:04

the output average count value. So these two values are written as a key-value

4:10

pair to the context, and all of that is kept within the try block. In the

4:15

reducer, the Reducer class is inherited by AverageCountReducer, which overrides the

4:23

reduce method. Here we have the comment

4:26

count equal to zero and the comment length sum equal to zero as well. Then, in the

4:31

loop, for each AverageCountData in values, we write

4:37

comment count += data.getCommentCount(), so the comment count gets

4:41

increased, and the length sum is increased by data.getAverageLength() times data.getCommentCount(). So the comment count and the average length are multiplied, and the product obtained is added to the

4:56

length sum. Then we use the set methods,

5:01

setCommentCount and setAverageLength. The comment count is set to the total,

5:06

and the average length is set to the length sum divided by the comment count, and that is what we write as a key-value

5:12

pair to the context. We have already discussed xmlToMap, so you know that part. In

5:22

this way we also have the main method here. The main method requires two

5:25

arguments, so if two arguments have not been passed it reports an

5:29

error and exits with System.exit(2). Otherwise the first argument is

5:34

accessed as args[0] and the second argument as args[1],

5:40

and these are taken as the paths for FileInputFormat and FileOutputFormat.

5:46

Then the mapper class and the reducer class are

5:50

set accordingly, using the classes we discussed earlier that inherit from Mapper and Reducer. Then we

5:59

go for job.setOutputKeyClass and then job.setOutputValueClass, so

6:05

these two types are set. Then, depending upon the success, exit

6:10

zero or exit one takes place, based on the boolean result there.
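The mapper and reducer arithmetic walked through above can be tried without a cluster. The following is a plain-Java simulation under stated assumptions, not the Hadoop job from the video: the class name `AverageCountSimulation`, the nested `CountAverage` type, and the sample comments are all hypothetical, and grouping by user ID is done with an in-memory map instead of the framework's shuffle.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory simulation of the map and reduce steps.
class AverageCountSimulation {

    /** A partial result: how many comments, and their average length. */
    static final class CountAverage {
        final long count;
        final double average;

        CountAverage(long count, double average) {
            this.count = count;
            this.average = average;
        }
    }

    /** Map step: one comment becomes count = 1, average = its own length. */
    static CountAverage map(String commentText) {
        return new CountAverage(1, commentText.length());
    }

    /** Reduce step: weight every average by its count, then divide by the total count. */
    static CountAverage reduce(List<CountAverage> values) {
        long commentCount = 0;
        double lengthSum = 0.0;
        for (CountAverage data : values) {
            commentCount += data.count;
            lengthSum += data.average * data.count;
        }
        return new CountAverage(commentCount, lengthSum / commentCount);
    }

    public static void main(String[] args) {
        // Made-up (userId, commentText) pairs standing in for parsed rows.
        String[][] comments = { {"7", "ok"}, {"7", "good"}, {"9", "thanks"} };

        // Stand-in for the shuffle: group the mapped values by user ID.
        Map<String, List<CountAverage>> grouped = new LinkedHashMap<>();
        for (String[] comment : comments) {
            grouped.computeIfAbsent(comment[0], key -> new ArrayList<>()).add(map(comment[1]));
        }

        for (Map.Entry<String, List<CountAverage>> entry : grouped.entrySet()) {
            CountAverage result = reduce(entry.getValue());
            System.out.println(entry.getKey() + "\t" + result.count + "\t" + result.average);
        }
        // User 7 has lengths 2 and 4, so count 2 and average 3.0; user 9 has count 1 and average 6.0.
    }
}
```

Multiplying each average by its count before summing is what keeps the result correct when an input value already summarizes several comments. For instance, merging a partial result of 2 comments averaging 3.0 with a partial result of 1 comment averaging 6.0 gives 3 comments averaging 4.0, not the 4.5 that a plain average of the two averages would give.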

6:15

Now let me go for the execution of these two Java classes. Before that, we

6:21

shall create the jar file. How to create it: on this particular package name,

6:26

right-click, then go for Export and then JAR. Then we

6:31

give the proper name and the folder, and then go for Next and Finish.

6:35

We have already created the jar, so I am not going to finish those steps, but these

6:39

are the steps to be followed. Let me show how the command has to be

6:44

issued. So it is hadoop jar, then the MapReduce design pattern jar files folder, which is the

6:50

respective folder, and then the summarization patterns jar, and then we have

6:55

the average count, which is the package name, and then AverageCountMRTask, which is

7:01

the respective class name. Then /input/comments: within this folder

7:06

we have the input file, that is comments.xml. And /output will be the

7:10

output folder in which the part files will be created after running this command. So let us run this command. At first I am not finding success:

7:37

the name node server is now in the safe state.

7:41

So let me make it come out of the safe state. For the name node,

7:47

I am going to bring the name node out of the safe state. So the

7:52

command will be hadoop dfsadmin -safemode leave. So

8:03

now I think it is okay. Let me execute the command once again. If I get

8:08

success, then under the output folder the part file will be created, containing the respective output. Yes, we

8:18

have got the output file created. Let me check here: comments.xml is in the input

8:23

folder, which was there, and now we go to the output folder, and

8:28

there we get this part-r-00000. So here is the part file; only a

8:34

single part file has been created, and the output has been stored here. So let me go

8:39

back to the console again and use the hdfs dfs command with the -cat option to

8:50

look at this part file's content. So /output is the path name, and then part

8:59

star, so the content of all the files starting with part will be printed. So there is

9:07

the respective content of the part file. We have the ID, then we have

9:11

the comment count, that is, how many comments he or she has made, and then we'll be

9:15

having the average comment length so for 4 to 2.5 is a comment length so in

9:22

this way you find the rest of the content of the part

9:26

file. So let me delete this output folder, because the other MapReduce programs

9:31

will be accessing the same folder. So let me delete that one. It is not mandatory, but it is done for the next execution.

9:37

So this is the delete command.
